Why Pentests Break Engineering Workflows (And What Actually Works Instead)

As security becomes a business requirement, traditional pentesting often becomes a blocker. Reports satisfy auditors but overwhelm engineering, eroding trust, stalling remediation, and slowing deals. This article explains why this happens and what’s required to close the gap.

You don’t run a pentest to cause chaos.

You run one to gain confidence.

Maybe there’s a SOC 2 deadline looming. Maybe an enterprise buyer is asking for proof. Maybe you just want to know whether your system would hold up under a real attack. Instead of clarity, what often follows is weeks of unplanned work, frustrated engineers, and a lingering feeling that security hasn’t actually improved.

The problem usually isn’t a lack of care or capability on your team. It’s that most pentests simply aren’t designed for how engineering teams actually work.

A bad pentest rarely looks bad at first. It looks like any other pentest. You’re handed a fancy report, packed with charts, recommendations, findings, severity ratings, and enough screenshots to suggest real effort went into the work. You start skimming not to understand risk, but to gauge impact. How much time will this take? How disruptive will it be to the team? A pentest meant to validate your security posture starts to feel less like reassurance and more like something you need to manage around.

Most pentests are still designed for auditors, not the engineers who have to fix the findings. Auditors care about coverage and SLAs. You care about actual risk and whether it is worth the trade-off in development time.

Where Pentests Break Down in Practice

It's frustrating when a pentest upends your team's schedule. Mitigation can feel impossible with hard-set deliverables and a team that's already stretched. There are a few primary reasons why getting a pentest can lead to schedule mayhem:

1. Security & Severity Inflation Breaks Trust

When a pentest isn’t thorough, when the provider doesn’t properly vet its findings, or when most findings come back labeled High or Critical, engineers start questioning the results. Teams lose the ability to distinguish urgent findings from noise, and real security gaps from “nice to haves.”

The downstream effects are corrosive. Engineering teams start to tune out security reports because everything feels equally catastrophic. Backlogs fill with “critical” issues that never get addressed, not because teams are careless, but because the signal-to-noise ratio is off.

Over time, this damages security credibility. Developers become skeptical of security guidance, including pentest results. Teams begin to question the value of testing altogether. What should be a decision-making tool becomes just another document that gets skimmed, archived, and forgotten.

“When everything is a critical priority, nothing is a priority.”

— VP of Engineering, MarTech SaaS

Once trust in security reports breaks down, teams stop seeing any value in them and start treating them as a distraction.

A popular Reddit post captures engineering teams' frustration with reports dumped on them with “ASAP” slapped on the title.

2. Lack of Trust Breaks Ownership

When your team feels that the pentest report is useless, no one takes ownership of fixing vulnerabilities. It is viewed as a waste of time compared to the “actual” work of building revenue-generating new features or fixing bugs to avoid churn. When vulnerabilities aren’t tied clearly to owners, they will bounce between teams, stall in Jira, or get “fixed” without addressing the root cause. Remediation becomes fragmented and slow. Vulnerabilities can be noted as accepted risk without the engineer responsible for the ticket understanding the business implications.

Researchers examined distributed software projects with low trust and found that when trust was lacking:

- Team members became self-protective rather than collaborative

- Individuals began to prioritize personal goals over group goals

- Mutual feedback, information exchange, and open communication decreased

- There was increased conflict and more managerial monitoring

- Productivity and quality declined

This matters because self-protection and siloed behavior are the direct opposite of ownership. People stop feeling responsible for collective outcomes when they don’t trust others to support them or share risk. And security is a team sport, played across security, compliance, software, IT, and DevOps teams.

3. The One-Way Handoff

The report gets delivered, and the pentesting support team disappears. Your team is left trying to interpret findings in isolation, infer intent, guess exploit paths, and apply surface-level fixes that may not resolve the underlying issues. Worse, without context or clarification, teams can accidentally introduce new risks that go unnoticed. Rather than acting as the start of an improvement loop, the pentest becomes a dead-end artifact.

In several online discussions about pentest reporting, engineers and testers alike note that most outputs only document vulnerabilities, with little to no interactive walkthrough, forcing teams to “just guess how to fix it” or “interpret without help.”

In a heated LinkedIn thread, one security leader puts it best

On Reddit, practitioners describe the struggle to map reports to real remediation because there’s no structured support or dialogue after delivery.

The result is a cycle where engineering teams treat security findings as a checklist to close rather than as ownership-worthy issues to understand and fix well, because the process produces a report but no shared sense of purpose or clear learning opportunity.

How Most Teams End Up Here (Even With the Best Intentions)

Most pentests start for the same reasons:

- A SOC 2 audit is approaching.

- You want to “do security right.”

- You’re moving upmarket.

- An enterprise deal is on the line.

As a product matures, there comes a turning point where security stops being a background concern and becomes a hard business requirement. This is natural, but the sheer amount of associated work is often unexpected. A common trigger is landing a particularly picky enterprise customer. Suddenly, formal security requirements appear, and engineers are responsible for security controls, documentation, and defensibility on top of their existing responsibilities.

That burden often lands squarely on engineering.

New processes introduce real overhead; much of it is disconnected from day-to-day product decisions. Questioning scope or priorities rarely changes the outcome. The deal is on the line, and the deadline keeps moving closer.

At the same time, enterprise security teams operate with a strict zero-trust mindset. Approving a vendor is a personal risk for them, so due diligence is non-negotiable. They expect comprehensive testing across the real attack surface:

- Authenticated applications

- Internal networks

- Multi-tenant environments

- Sometimes, even marketing sites

Narrow or black-box-only tests rarely pass muster, and when testing falls short, deals stall or collapse.

“We won’t be moving forward. Last year’s pentest was limited to black-box testing, and the B2C application was out of scope again this year.”

— CISO, Global Relocation Firm

This is the inflection point most growing teams eventually reach. Quick fixes stop scaling. Security has to mature, and risk must be understood, managed, and defensible.

What Drives Engineering-Grade Pentesting

Frameworks That Guide Thinking

Quality pentesting isn’t about how many tools are run. It’s about how findings are produced, reviewed, and validated.

That requires:

- Industry standards that drive pentesting methodologies: Industry frameworks such as OWASP, SANS, and NIST should be used as practical guardrails, not compliance artifacts. Used correctly, they ensure consistent coverage, reduce blind spots, and make results comparable over time. They create a shared baseline for what “good testing” actually means across engagements. This also helps enterprise stakeholders “trust” the pentest report.

- Pentesting methodologies drive the pentest: A strong methodology shapes the entire engagement: what gets tested, how depth is determined, and how evidence is validated. It prevents testing from becoming a collection of ad hoc techniques and instead ensures systematic coverage aligned with real-world attack patterns and the client’s technology stack.

- Competent testing teams execute the pentest: Methodology alone isn’t enough. Skilled testers apply judgment, adapt techniques to the environment, and understand how modern systems actually fail. They don’t just follow checklists; they reason about architecture, identify meaningful attack paths, and prioritize findings based on real exploitation potential.

- Risk calculated for each vulnerability from real-world context, not opinions: Risk should be calibrated using context, not generic severity labels. CVSS provides a baseline, but real prioritization requires assessing exploitability, likelihood, attacker value, and environmental impact. Asking “Can this actually be exploited here?” and “If it were actually exploited, what kind of business damage are we looking at?” turns findings into decision-making tools rather than abstract warnings.

- Clear, actionable report that maps to software development workflows: Reports must translate security findings into engineering work. That means mapping issues to real components, clearly explaining the root cause, and providing remediation guidance that fits existing workflows (tickets, backlogs, CI/CD). Without that bridge, findings remain theoretical rather than becoming shippable improvements.

- Clear ownership of findings and timely support when engineers need it: The “pentesting” relationship doesn’t end at report delivery. Effective pentesting includes accessible technical support when engineers validate, implement, or question remediation. That means testers who can explain exploit paths, clarify intent, and collaborate on fixes, reinforcing trust and enabling teams to confidently own outcomes.
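To make the idea of context-calibrated risk concrete, here is a minimal Python sketch. It is not a standard scoring method: the `Finding` fields, the multipliers, and the priority thresholds are all illustrative assumptions that a team would need to agree on for its own environment. The only input taken from outside is the CVSS base score, which serves as the baseline.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    title: str
    cvss_base: float        # 0.0-10.0 baseline from the tester's CVSS vector
    exploitable_here: bool  # was a working exploit path shown in this environment?
    internet_exposed: bool  # reachable without prior network access?
    business_impact: int    # 1 (minor) to 5 (severe); hypothetical scale


def contextual_risk(f: Finding) -> float:
    """Scale the CVSS baseline by environmental context (illustrative weights)."""
    score = f.cvss_base
    score *= 1.0 if f.exploitable_here else 0.5   # unproven exploit path: halve
    score *= 1.0 if f.internet_exposed else 0.7   # internal-only: reduce
    score *= 0.6 + 0.1 * f.business_impact        # multiplier from 0.7 to 1.1
    return round(min(score, 10.0), 1)


def priority(score: float) -> str:
    """Map a contextual score to a backlog priority (assumed thresholds)."""
    if score >= 9.0:
        return "critical"
    if score >= 7.0:
        return "high"
    if score >= 4.0:
        return "medium"
    return "low"


verbose_errors = Finding("Verbose error page", 7.5, False, True, 1)
idor = Finding("IDOR on invoices API", 6.5, True, True, 5)

for f in (verbose_errors, idor):
    print(f.title, "->", priority(contextual_risk(f)))
```

In this sketch the finding with the higher CVSS base score ends up with the lower priority, because no exploit path was demonstrated and the business impact is minor. That is the kind of reordering generic severity labels cannot express.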

Breaking Reporting Bottlenecks to Accelerate Remediation

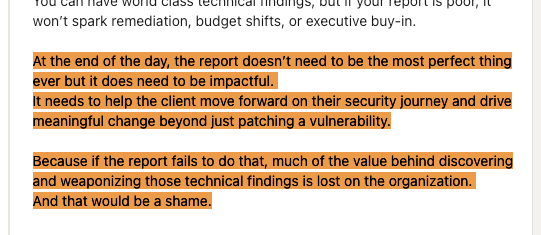

A lot of pentest reports fail not because the findings are wrong, but because the format is unusable. A massive PDF is nobody’s idea of a practical engineering input. Manually copying vulnerabilities into Jira tickets wastes time, introduces errors, and breaks traceability between the report and the bug-tracking system.

Engineering-friendly reports behave like real engineering artifacts. They provide clear impact, reproducible steps, and concrete remediation guidance that teams can act on immediately. But effective reporting also recognizes that one document cannot serve every audience well. Engineers, executives, auditors, and customers each need different levels of detail and framing.

That’s why high-quality programs separate outputs by intent:

- Internal reports optimized for engineers, focused on root cause, exploitability, and implementation guidance

- External reports structured for audits, compliance, and customer assurance without additional translation work

When reporting is designed this way, engineers know exactly what to do next, and audits no longer require costly rework.
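As a rough illustration of separating outputs by intent, the Python sketch below takes one structured finding and derives two views from it: a ticket payload for engineers and a trimmed summary for external readers. The field names, the `PT-001` identifier, and the payload shape are hypothetical. It does not target any specific tracker's API, so a real integration would map these fields to whatever the tracker expects.

```python
finding = {
    "id": "PT-001",  # hypothetical identifier
    "title": "Invoice API returns other tenants' invoices",
    "severity": "high",
    "component": "billing-service",
    "root_cause": "Invoice lookup does not check the caller's tenant ID",
    "steps_to_reproduce": [
        "Log in as a user of tenant A",
        "Request an invoice ID that belongs to tenant B",
        "Observe that the invoice is returned",
    ],
    "remediation": "Enforce tenant ownership checks in the invoice lookup",
    "status": "open",
}


def to_ticket(f: dict) -> dict:
    """Internal view: everything an engineer needs to reproduce and fix."""
    steps = "\n".join(
        f"{i}. {step}" for i, step in enumerate(f["steps_to_reproduce"], start=1)
    )
    description = (
        f"Root cause: {f['root_cause']}\n\n"
        f"Steps to reproduce:\n{steps}\n\n"
        f"Suggested remediation: {f['remediation']}"
    )
    return {
        "title": f"[{f['severity'].upper()}] {f['title']}",
        "component": f["component"],
        "description": description,
        "labels": ["pentest", f["id"]],  # keeps the ticket traceable to the report
    }


def to_external_summary(f: dict) -> dict:
    """External view: status and severity only, without exploit details."""
    return {key: f[key] for key in ("id", "title", "severity", "status")}


print(to_ticket(finding)["description"])
print(to_external_summary(finding))
```

Because both views come from the same record, the finding ID carries through to the ticket labels, and nobody has to copy text out of a PDF by hand.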

Making Remediation a Collaborative Phase

Finding issues is rarely the hard part. Fixing them correctly is.

Engineering-first pentesting treats remediation as an ongoing collaboration, not a one-way handoff. With direct access to the testers who uncovered the issues, engineering teams can walk through complex findings, validate assumptions, and avoid surface-level fixes that only mask deeper problems.

This collaboration enables teams to make deliberate, informed decisions about risk:

- What must be eliminated

- What can be mitigated

- What belongs in another architectural layer

- What risk is consciously accepted

Retesting is built in, not bolted on, so fixes actually hold under scrutiny. When remediation works this way, pentests stop creating operational churn and start producing durable, compounding security improvements.
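One way to keep these decisions explicit is to record them alongside each finding. The Python sketch below is an assumption-laden example rather than a prescribed process: the `Disposition` values mirror the four options above, while the `Decision` fields and the closing rule (accepted risk needs a named owner and a written rationale, everything else needs a passing retest) are hypothetical conventions.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Disposition(Enum):
    ELIMINATE = "eliminate"
    MITIGATE = "mitigate"
    MOVE_LAYER = "handle in another architectural layer"
    ACCEPT = "accept"


@dataclass
class Decision:
    finding_id: str
    disposition: Disposition
    owner: str
    rationale: str
    retest_passed: Optional[bool] = None  # None until a retest has been run


def is_closed(d: Decision) -> bool:
    """Assumed rule: accepted risk needs an owner and a rationale;
    every other disposition needs a passing retest."""
    if d.disposition is Disposition.ACCEPT:
        return bool(d.owner.strip()) and bool(d.rationale.strip())
    return d.retest_passed is True


decisions = [
    Decision("PT-001", Disposition.ELIMINATE, "billing-team", "Fix tenant check", True),
    Decision("PT-002", Disposition.MITIGATE, "platform-team", "Add rate limiting"),
    Decision("PT-003", Disposition.ACCEPT, "", ""),
]

for d in decisions:
    print(d.finding_id, d.disposition.value, "closed" if is_closed(d) else "open")
```

Under these assumed rules, a fix without a retest and an accepted risk without an owner both stay open, which keeps "accepted" from becoming a quiet way to drop a ticket.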

Final Thought

Pentests don’t derail engineering teams because security is hard. They derail teams because the work isn’t designed to fit how engineering actually operates. When pentesting respects workflow, prioritization, and validation, it stops feeling like a blocker and starts functioning like infrastructure.
