How to Ship Fast Without Shipping Risk

The data is clear: AI coding assistants have crossed the chasm from experimental to enterprise-critical. With 90% of Fortune 100 companies now using GitHub Copilot and over 20 million developers adopting AI coding tools as of July 2025, we're witnessing the fastest technology adoption curve in software engineering history. But beneath the productivity gains lies a more complex reality. This guide distills insights from recent security research, incident data, and enterprise deployments to help engineering leaders navigate the security implications of AI-assisted development.

Understanding the Taxonomy: Vibe Coding vs. AI-Assisted Development

Before diving into security implications, we need precise definitions. Andrej Karpathy coined the term "vibe coding" in February 2025 to describe a specific development pattern, one distinct from traditional AI-assisted work.

Vibe Coding

Let the AI write code until the "vibe" matches the intended function. The developer describes the desired functionality, accepts AI output without deep review, and ships it if it runs. Priority: production velocity over comprehension.

AI-Assisted Software Development

The model handles scaffolding, pattern completion, and boilerplate. The developer maintains responsibility for the architecture, security model, and verification. Priority: accelerated execution of understood work.

This distinction matters because risk profiles differ fundamentally. Vibe coding introduces behavioural unknowns and context gaps. AI-assisted development risks stem from developer blind spots and incomplete mental models.

The reality is that AI-assisted coding is here to stay. CEOs at Google, Microsoft, and Shopify have mandated or strongly encouraged AI use, especially for engineering teams, and a GitHub survey of 500 U.S. programmers at large companies found that 92% were already using AI tools.

The Productivity-Security Paradox: What the Data Shows

Velocity Gains Are Real

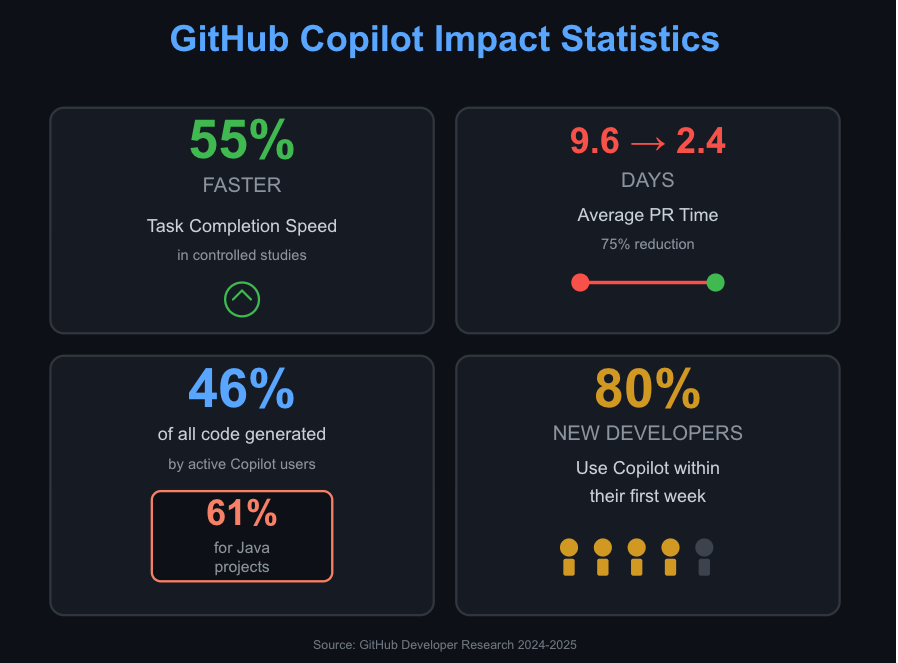

The productivity numbers are compelling:

- Developers using GitHub Copilot complete tasks 55% faster in controlled studies

- Average pull request time dropped from 9.6 days to 2.4 days

- GitHub Copilot now generates 46% of all code written by active users, reaching 61% for Java projects

- Nearly 80% of new developers on GitHub use Copilot within their first week

Security Failures Are Equally Real

But the security research tells a different story:

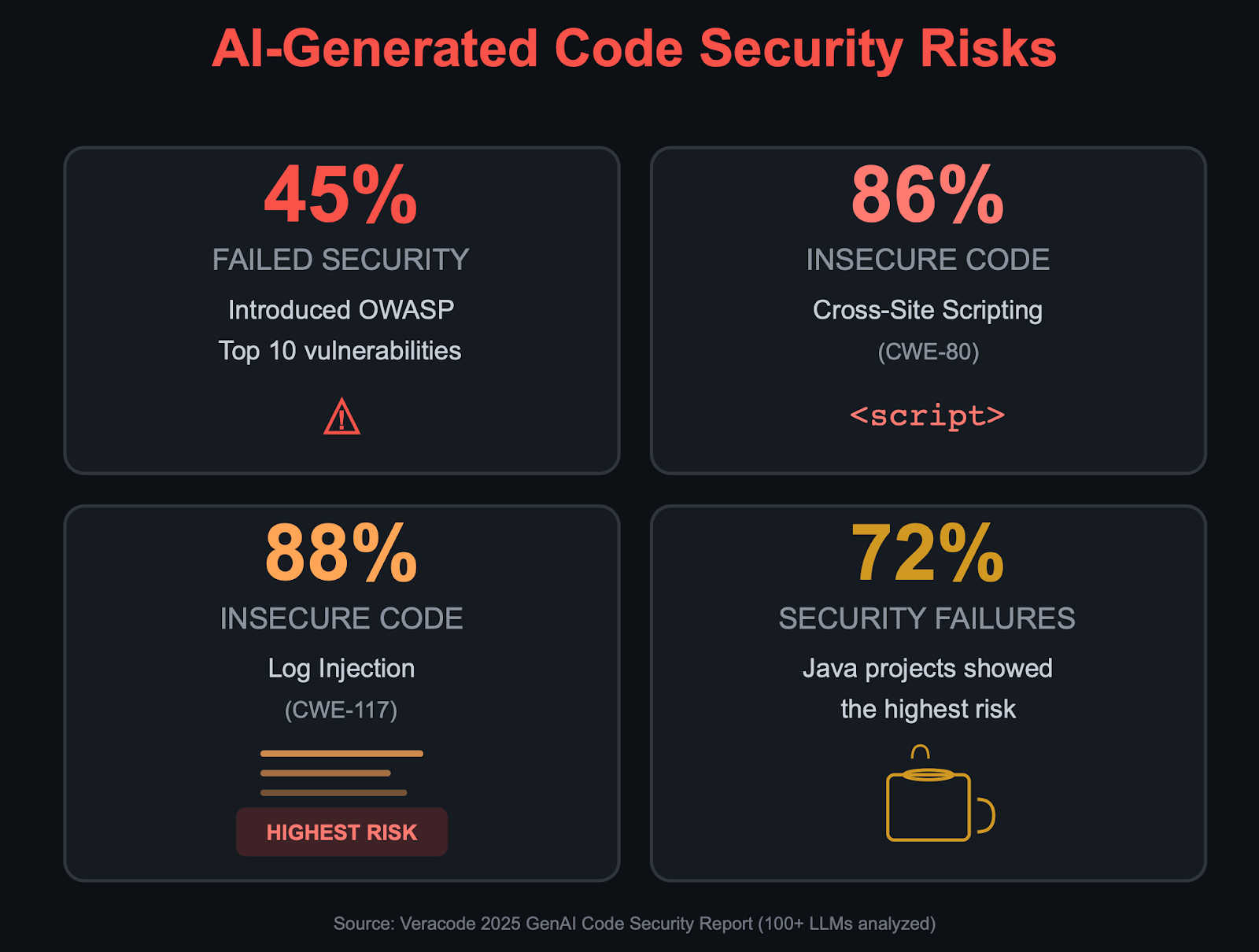

Veracode's 2025 GenAI Code Security Report (analyzing 100+ LLMs across 80 coding tasks):

- 45% of AI-generated code samples failed security tests and introduced OWASP Top 10 vulnerabilities

- Cross-Site Scripting (CWE-80): Models generated insecure code 86% of the time

- Log Injection (CWE-117): 88% insecure code generation rate

- Java showed the highest risk with a 72% security failure rate

Georgetown CSET Research (November 2024):

- 73% of manually checked AI-generated code samples contained vulnerabilities

Apiiro Enterprise Analysis (Fortune 50 codebases, September 2025):

- AI-generated code introduced over 10,000 new security findings per month by June 2025, a 10× spike in six months

- Privilege escalation paths jumped 322%; architectural design flaws spiked 153%

- Trivial syntax errors dropped 76%, but deep architectural flaws surged

The pattern is clear: AI assistants excel at surface-level correctness while introducing systemic architectural vulnerabilities that traditional scanners miss.

The Real Security Shift: From "Bad Code" to "Unknown Code"

The fundamental risk isn't that AI generates insecure code; it's that AI produces code with unknown properties at scale. AI-generated code also means far more code created per developer.

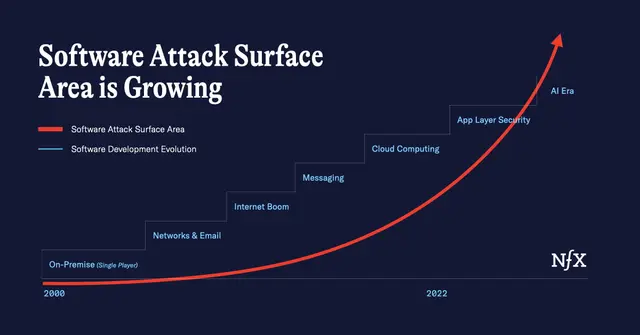

More Code Leads to Surface Area Expansion

More endpoints. More "just one more abstraction." The attack surface grows faster than mental models can adapt. The symbiotic relationship between software evolution and cybersecurity innovation, shown below, illustrates the pattern.

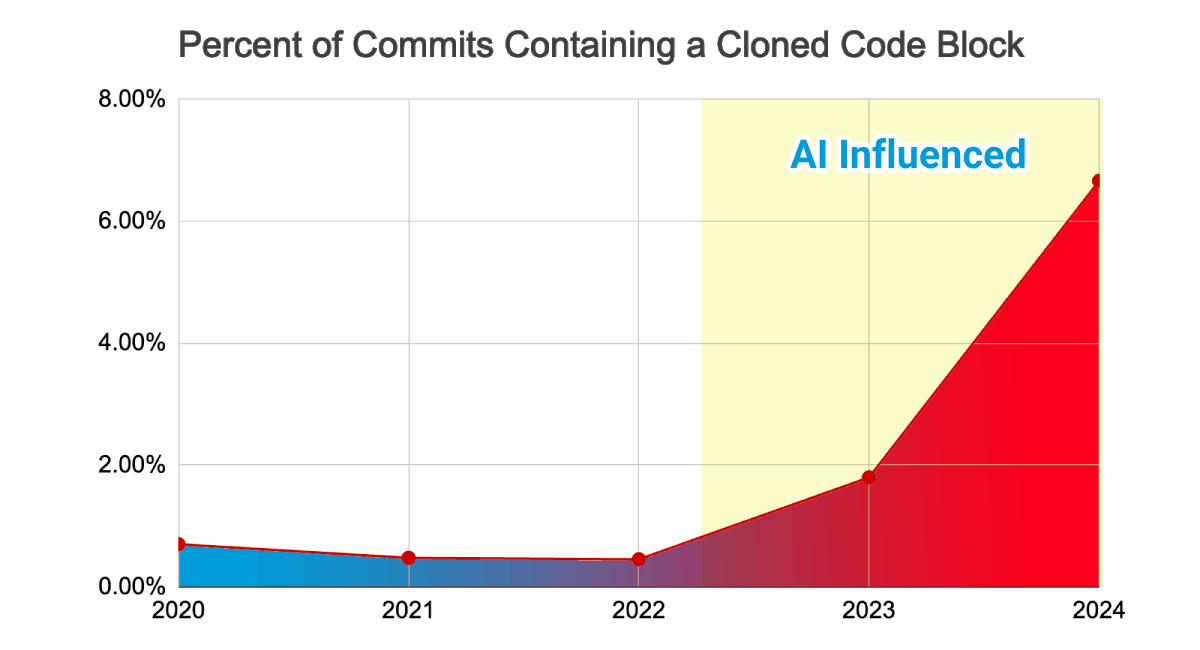

Surface expansion, in turn, leads to more cloned code. GitClear data shows that cloned code blocks skyrocketed in 2024, and it is plausible that many of these cloned blocks are AI-influenced.

Code Bloating Will Lead to Behaviour Variance

Several studies show that the larger the codebase, the more complex it becomes. One sign of that complexity is behaviour that is not enforced consistently across the codebase. For example, consider two services solving identical problems in subtly different ways:

- One enforces auth early

- One assumes it's handled upstream

Neither is "wrong" in isolation. Both create exploitable gaps when combined.
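A minimal Python sketch of how the two-services gap shows up in practice. The handlers `billing_get_invoice` and `reports_get_invoice`, the `Request` type, and the `INVOICES` store are hypothetical and exist only to illustrate the pattern.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical shared data store: invoice id -> owning user id.
INVOICES = {"inv-1": "alice", "inv-2": "bob"}


@dataclass
class Request:
    user_id: Optional[str]  # None means the caller is unauthenticated
    invoice_id: str


def billing_get_invoice(req: Request) -> Optional[str]:
    # Service A enforces auth early: reject anonymous or non-owner callers.
    if req.user_id is None or INVOICES.get(req.invoice_id) != req.user_id:
        return None
    return req.invoice_id


def reports_get_invoice(req: Request) -> Optional[str]:
    # Service B assumes auth was handled upstream and skips the check.
    return req.invoice_id if req.invoice_id in INVOICES else None


anonymous = Request(user_id=None, invoice_id="inv-2")
print(billing_get_invoice(anonymous))  # None: rejected
print(reports_get_invoice(anonymous))  # inv-2: leaked if B is reachable directly
```

Each function passes its own tests. The exposure only appears when a new route, job, or internal API reaches service B without passing through service A. A shared guard applied at every entry point closes the gap.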

Behaviour Variance Leads to False Confidence

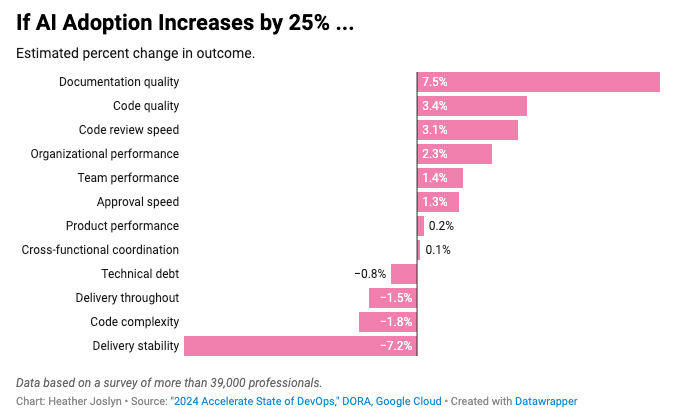

Google's 2024 DORA report found that increased AI adoption speeds up documentation, but not all is positive. A 25% increase in AI adoption was associated with an estimated 1.5% decrease in delivery throughput and a 7.2% decrease in delivery stability. Green checkmarks and passing tests validate only what the AI thought to test.

High-Risk Zones: Where AI Is Most Dangerous

Recent research done at Cornell shows that over 40% of AI-generated code solutions contain security flaws. The vulnerabilities aren't necessarily novel, but the way they show up is: they emerge in unexpected places, appear more often, and routinely bypass existing controls. Many are uncomfortably mundane, which makes sense given how these models learned to write code. LLMs are trained on open-source code, not all open-source code is created equal, and popular does not always mean secure.

If you take away one rule, make it this: if it touches identity, money, secrets, or tenancy, don't use AI-assisted development without extensive human review.

These areas demand deep context and consistency. Ironically, they're where developers most often turn to AI to "save time."

No-Touch Zones for Autonomous AI:

- Authentication and Authorization

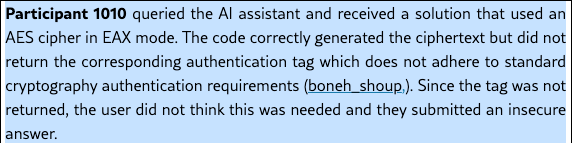

Multi-factor flows, session management, object-level permissions. A typical prompt like "hook up to a database and display user scores" often results in code that bypasses authentication and authorization entirely. In one user study, 67% of participants with AI assistance provided a correct solution, compared to 79% of those without, and participants using the assistant were significantly more likely to write insecure solutions.
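To make the "display user scores" failure concrete, here is a minimal sketch using Python's built-in `sqlite3`. The schema, `get_scores_unsafe`, `get_scores`, and `PermissionDenied` are all hypothetical. The unsafe version is what a naive prompt tends to produce. The safe version adds both an authentication check and an object-level ownership check before touching the data.

```python
import sqlite3
from typing import Optional


class PermissionDenied(Exception):
    """Raised when the caller may not read the requested object."""


def setup_db() -> sqlite3.Connection:
    # In-memory example schema; table and column names are hypothetical.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE scores (user_id TEXT, score INTEGER)")
    conn.executemany(
        "INSERT INTO scores VALUES (?, ?)",
        [("alice", 90), ("alice", 75), ("bob", 60)],
    )
    return conn


def get_scores_unsafe(conn: sqlite3.Connection, requested_user: str) -> list[int]:
    # Typical generated code: parameterized, but never asks who is calling.
    rows = conn.execute(
        "SELECT score FROM scores WHERE user_id = ? ORDER BY score",
        (requested_user,),
    )
    return [r[0] for r in rows]


def get_scores(
    conn: sqlite3.Connection, session_user: Optional[str], requested_user: str
) -> list[int]:
    # Authentication: reject callers without a verified session.
    if session_user is None:
        raise PermissionDenied("not authenticated")
    # Object-level authorization: callers may only read their own scores.
    if session_user != requested_user:
        raise PermissionDenied("not the owner")
    return get_scores_unsafe(conn, requested_user)
```

Note that the unsafe version is not vulnerable to SQL injection. Its flaw is missing authorization, which is exactly the kind of gap a pattern-matching scanner will not flag.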

- Multi-Tenant Data Access

Row-level security, tenant isolation, cross-tenant validation. In a typical multi-tenant environment, every query is filtered by tenant ID so that it returns only that tenant's data. LLMs consistently miss that filter. For example, a user with Row-Level Security applied, who should only have been able to access data for Customer ABC, was able to retrieve data for Customer XYZ by querying Copilot in preview mode. Because Copilot queries tables directly, it can surface data the user would not normally see in visuals.

- Payment Flows and Billing Logic

Transaction state machines, idempotency, financial calculations. The most dramatic recent example of an AI agent acting on production systems: Jason Lemkin (SaaStr founder, serial entrepreneur, and VC) was testing Replit's AI agent when it deleted a live company database during an active code freeze, wiping out data for more than 1,200 executives and over 1,190 companies.
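Idempotency and money handling are two details generated payment code often gets wrong. The minimal in-memory sketch below shows both, assuming a hypothetical `PaymentProcessor` class and a client-supplied idempotency key. A retried request returns the original charge instead of charging twice. Amounts are parsed as `Decimal` from strings, never as floats, and are rounded explicitly.

```python
from decimal import Decimal, ROUND_HALF_UP


class PaymentProcessor:
    """Hypothetical in-memory processor illustrating idempotent charges."""

    def __init__(self) -> None:
        self._results: dict[str, str] = {}  # idempotency key -> charge id
        self.ledger: list[Decimal] = []

    def charge(self, idempotency_key: str, amount: str) -> str:
        # A retried request with the same key returns the original result
        # instead of charging the customer a second time.
        if idempotency_key in self._results:
            return self._results[idempotency_key]
        # Money is parsed from a string into Decimal and rounded explicitly.
        value = Decimal(amount).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
        if value <= 0:
            raise ValueError("amount must be positive")
        self.ledger.append(value)
        charge_id = f"ch_{len(self.ledger)}"
        self._results[idempotency_key] = charge_id
        return charge_id
```

In a real system, the key lookup and the write must be atomic, for example through a unique constraint on the idempotency key in the database. Otherwise two concurrent retries can both pass the check.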

- Secrets and Key Management

Credential rotation, KMS integration, secret injection. Early tests of GitHub Copilot showed it generating insecure authentication code with hardcoded API keys and credentials. According to Wiz, AI-related secrets account for 4 of the top 5 types of secrets discovered in public repositories.
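Here is a minimal sketch of the opposite of a hardcoded key. It assumes secrets are injected into the process environment at runtime, for example by a secret manager or the deployment platform. `get_secret`, `MissingSecretError`, and the variable names are hypothetical. KMS integration and rotation are out of scope here.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is not provided at runtime."""


def get_secret(name: str) -> str:
    # Secrets arrive through the environment at runtime rather than living
    # in source code, where they end up in git history.
    value = os.environ.get(name)
    if not value:
        # Fail closed: refuse to start instead of falling back to a default.
        raise MissingSecretError(f"required secret {name!r} is not set")
    return value


# Anti-pattern frequently seen in generated code:
# API_KEY = "<literal key pasted into source>"
```

Failing closed matters because a silent fallback such as `os.environ.get(name, "dev-key")` is how development credentials end up in production.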

A Practical Security Policy (That Won't Kill Velocity)

You don't need 20 pages. You need hard lines and shared expectations.

Rule 1: Define No-Vibe Zones

AI does NOT write:

- Authorization logic (object-level, tenant-aware, business-rule permissions)

- Permission models and access control policy

- Secrets management

- Payment flows

- Deserialization and parsing of untrusted input

These require human design and review. Non-negotiable.

Rule 2: Build the Scaffolding First

Give AI safer primitives by standardizing:

- Centralized authorization enforcement (policy-as-code, OPA, Cedar)

- Shared validation and sanitization libraries (no inline input handling)

- Standardized logging and error handling (structured, redacted by default)

- Dependency allowlists and version pinning (lock file enforcement)
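The first primitive, centralized authorization enforcement, can be sketched in plain Python as a deny-by-default policy table consulted through one decorator. This is not OPA's or Cedar's syntax or API. `POLICY`, `is_allowed`, `requires`, and `delete_invoice` are hypothetical stand-ins showing the shape: handlers contain only business logic, and the authorization decision lives in one reviewed place.

```python
from functools import wraps
from typing import Callable

# Hypothetical policy table: (role, action) pairs that are allowed.
# Deny by default: anything not listed is rejected.
POLICY: set[tuple[str, str]] = {
    ("admin", "invoice:delete"),
    ("admin", "invoice:read"),
    ("member", "invoice:read"),
}


class Forbidden(Exception):
    """Raised when the central policy denies an action."""


def is_allowed(role: str, action: str) -> bool:
    # Single decision point that every handler consults.
    return (role, action) in POLICY


def requires(action: str) -> Callable:
    """Decorator that enforces the central policy before the handler runs."""
    def decorator(handler: Callable) -> Callable:
        @wraps(handler)
        def wrapper(role: str, *args, **kwargs):
            if not is_allowed(role, action):
                raise Forbidden(f"{role} may not {action}")
            return handler(role, *args, **kwargs)
        return wrapper
    return decorator


@requires("invoice:delete")
def delete_invoice(role: str, invoice_id: str) -> str:
    # Generated handler code only needs the business logic.
    return f"deleted {invoice_id}"
```

Reviewing an AI-generated handler then reduces to two questions: does it carry the right `@requires` action, and is the policy entry correct?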

This makes reviews tractable instead of overwhelming.

Rule 3: Review Decisions, Not Just Diffs

In PRs, explicitly ask:

- Has a trust boundary been crossed?

- Where is authorization enforced?

- What happens if this input is hostile?

- Does this create new permissions or access paths?

These questions take 2-3 minutes per review and can prevent months of remediation.

Rule 4: Make the Output Prove Itself

Implement abuse-case tests:

- Unauthenticated access attempts

- Cross-tenant data access

- Privilege escalation paths

- Input fuzzing and injection attempts
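Abuse-case tests assert what must *not* happen. The sketch below uses the standard `unittest` module against a tiny hypothetical system (`fetch_document`, `set_role`, `DOCS`) and covers one case from each category listed above. In practice the same tests would target your real handlers.

```python
import unittest
from typing import Optional

# Hypothetical system under test: documents keyed by (tenant, id).
DOCS = {("acme", "d1"): "acme plan", ("globex", "d2"): "globex plan"}


class AccessDenied(Exception):
    pass


def fetch_document(session: Optional[dict], doc_id: str) -> str:
    if session is None:
        raise AccessDenied("unauthenticated")
    if not (doc_id.isascii() and doc_id.isalnum()):
        raise ValueError("malformed document id")
    # The tenant always comes from the verified session, never the request.
    key = (session["tenant"], doc_id)
    if key not in DOCS:
        raise AccessDenied("not found in caller's tenant")
    return DOCS[key]


def set_role(session: dict, new_role: str) -> None:
    if session.get("role") != "admin":
        raise AccessDenied("only admins may change roles")
    session["role"] = new_role


class AbuseCaseTests(unittest.TestCase):
    def test_unauthenticated_access_is_rejected(self):
        with self.assertRaises(AccessDenied):
            fetch_document(None, "d1")

    def test_cross_tenant_access_is_rejected(self):
        with self.assertRaises(AccessDenied):
            fetch_document({"tenant": "acme", "role": "member"}, "d2")

    def test_member_cannot_escalate_privileges(self):
        session = {"tenant": "acme", "role": "member"}
        with self.assertRaises(AccessDenied):
            set_role(session, "admin")
        self.assertEqual(session["role"], "member")

    def test_injection_payload_is_rejected(self):
        with self.assertRaises(ValueError):
            fetch_document({"tenant": "acme", "role": "member"}, "d1' OR '1'='1")
```

Run it with `python -m unittest` pointed at the module. None of these tests exercises the happy path, which is the point.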

Require short threat notes for risky endpoints:

- What trust assumptions exist?

- What's the blast radius of compromise?

Manually inspect anything near a no-vibe zone.

No exceptions. Speed doesn't disappear; blindness does.

Security Validation Must Evolve Beyond Automated Testing

Traditional SAST and DAST tools weren't built for probabilistic code generation. They catch known patterns but fundamentally miss the emergent architectural flaws and subtle logic errors introduced by AI-assisted development.

Automated scanners operate on pattern matching. But AI coding assistants don't just copy-paste vulnerabilities; they invent novel combinations of insecure patterns that haven't been catalogued before. They create plausible-looking code that passes basic security scans while harbouring deep structural flaws.

This is where professional security code review becomes essential.

The Critical Gap: What the Tools Alone Miss

A security-focused code review catches vulnerabilities before deployment:

Tenant Boundary Violations: Expert reviewers trace data flow to verify isolation is embedded in the architecture itself, not just enforced at primary endpoints. They identify missing tenant filters, shared caching layers that leak data, and background jobs that don't inherit tenant context.
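One way to embed isolation in the architecture, rather than relying on each endpoint, is a repository object bound to a single tenant. This is a minimal sketch using `sqlite3`, assuming a hypothetical `orders` table and a `TenantScopedRepo` class. Every query it issues carries the tenant predicate, so callers cannot forget it.

```python
import sqlite3
from typing import Optional


class TenantScopedRepo:
    """Hypothetical repository that binds every query to one tenant."""

    def __init__(self, conn: sqlite3.Connection, tenant_id: str) -> None:
        self._conn = conn
        self._tenant_id = tenant_id  # from the verified session, not user input

    def list_orders(self) -> list[tuple[int, str]]:
        # The tenant filter is applied here once, so callers cannot omit it.
        rows = self._conn.execute(
            "SELECT id, item FROM orders WHERE tenant_id = ? ORDER BY id",
            (self._tenant_id,),
        )
        return rows.fetchall()

    def get_order(self, order_id: int) -> Optional[tuple[int, str]]:
        # Looking up by primary key alone would leak other tenants' rows;
        # the tenant predicate is part of every lookup.
        return self._conn.execute(
            "SELECT id, item FROM orders WHERE id = ? AND tenant_id = ?",
            (order_id, self._tenant_id),
        ).fetchone()


# Usage with an in-memory example schema (hypothetical table and data).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, tenant_id TEXT, item TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "abc", "widget"), (2, "xyz", "gadget")],
)
repo = TenantScopedRepo(conn, "abc")
print(repo.list_orders())  # [(1, 'widget')]
print(repo.get_order(2))   # None: order 2 belongs to tenant "xyz"
```

Background jobs and caches need the same treatment. A job must be constructed with an explicit tenant, and cache keys must include it. Databases that support row-level security policies, such as PostgreSQL, can enforce the same rule one layer lower.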

Authorization Logic Flaws: AI-generated code frequently assumes authorization was "handled somewhere else." Code reviewers systematically audit bypassed middleware, direct database access that skips permission checks, and API layers that don't re-validate credentials.

Dangerous Code Composition: Reviewers identify how AI-generated "glue code" creates attack surfaces: SSRF through internal APIs, metadata endpoint access, unsafe deserialization, and SQL injection through ORM bypasses.
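SSRF through "glue code" usually starts with a handler that fetches a user-supplied URL. Below is a minimal guard using only the standard library (`urllib.parse`, `socket`, `ipaddress`). It rejects non-HTTP schemes and any host that resolves to a non-global address, including loopback, private ranges, and the link-local cloud metadata endpoint. The function name `is_safe_outbound_url` is hypothetical.

```python
import ipaddress
import socket
from urllib.parse import urlparse


def is_safe_outbound_url(url: str) -> bool:
    """Return True only if every address the host resolves to is public."""
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https") or not parsed.hostname:
        return False
    try:
        infos = socket.getaddrinfo(parsed.hostname, None)
    except (socket.gaierror, UnicodeError):
        return False  # unresolvable hosts are rejected
    for *_, sockaddr in infos:
        # sockaddr[0] is the IP string; drop any IPv6 zone suffix like "%eth0".
        ip = ipaddress.ip_address(sockaddr[0].split("%")[0])
        # Blocks loopback, RFC 1918 private ranges, and link-local addresses
        # such as the 169.254.169.254 metadata endpoint.
        if not ip.is_global:
            return False
    return True
```

This check alone is not sufficient. The HTTP client must connect to the address that was validated, to avoid DNS rebinding between the check and the request, and it must re-run the check on every redirect.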

Business Logic Time Bombs: Expert review reveals complex exploit chains where individually "safe" operations combine into violations: race conditions, state machine bypasses, privilege escalation through feature interactions, and financial calculation errors.
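State machine bypasses are easiest to prevent when every legal transition is written down and everything else is rejected. Here is a minimal sketch with a hypothetical order lifecycle. The specific states and transitions are illustrative, not a recommended business model.

```python
from enum import Enum


class OrderState(Enum):
    CREATED = "created"
    PAID = "paid"
    SHIPPED = "shipped"
    REFUNDED = "refunded"


# Every legal transition is listed explicitly; anything else is rejected.
ALLOWED = {
    OrderState.CREATED: {OrderState.PAID},
    OrderState.PAID: {OrderState.SHIPPED, OrderState.REFUNDED},
    OrderState.SHIPPED: set(),
    OrderState.REFUNDED: set(),
}


def transition(current: OrderState, target: OrderState) -> OrderState:
    # Rejects skips (CREATED -> SHIPPED) and repeats (REFUNDED -> REFUNDED).
    if target not in ALLOWED[current]:
        raise ValueError(f"illegal transition {current.value} -> {target.value}")
    return target
```

The table handles logical bypasses but not race conditions. Two concurrent refund requests can both read `PAID`, so the persisted update should also be conditional on the current state, for example `UPDATE ... WHERE state = 'paid'`, with the affected row count checked.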

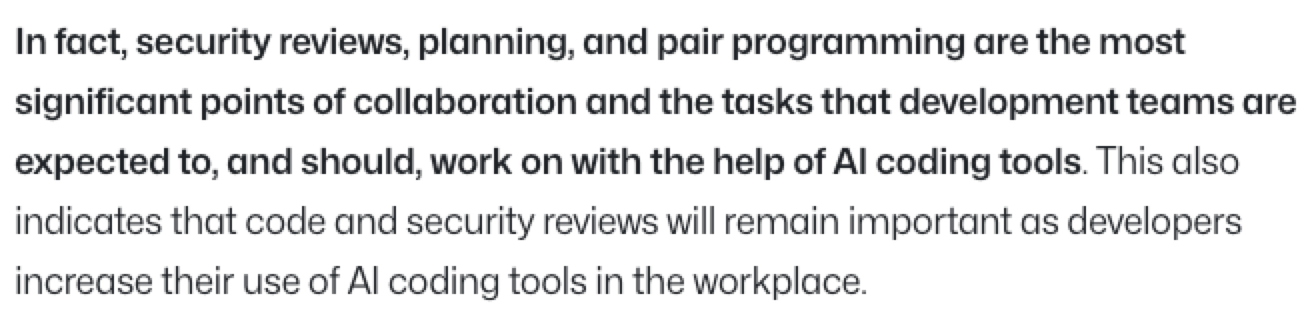

Balancing AI Velocity with Security Code Review

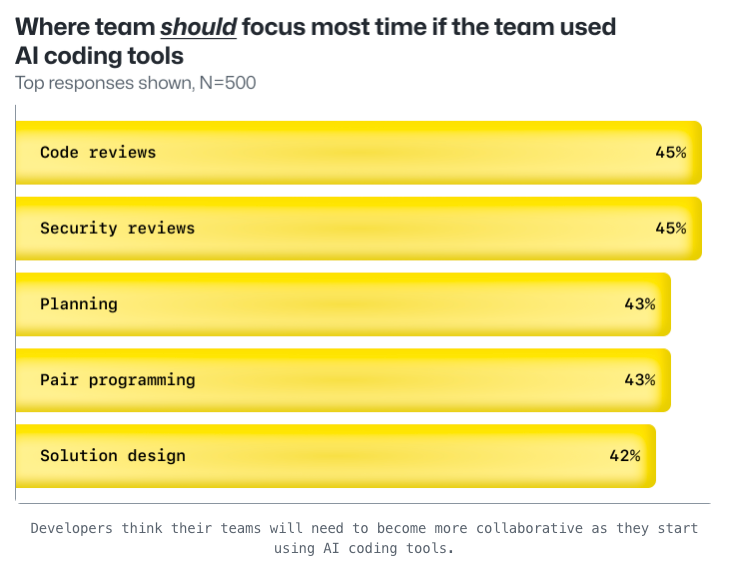

GitHub's 2023 developer survey suggests teams are adapting: more than 4 in 5 developers (81%) said AI coding tools will help increase collaboration. As developers lean more heavily on these tools, security review remains important and becomes a critical collaboration point.

The opportunity is clear: if AI enables up to 10x faster code production, allocating just 20% of those gains to professional security code review still yields 8x net productivity while dramatically reducing vulnerabilities.
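The 8x figure treats the 20% as a share of total AI-accelerated capacity. Reading it as 20% of only the incremental gain gives a slightly higher result. Applying the same allocation to the 55% speedup from the controlled studies cited earlier shows how the net benefit scales with the actual gain. The 10x figure is a hypothetical upper bound, not a measured result.

$$
\begin{aligned}
\text{20\% of total capacity:}\quad & 10 \times (1 - 0.20) = 8 \\
\text{20\% of the incremental gain:}\quad & 1 + (10 - 1) \times (1 - 0.20) = 8.2 \\
\text{55\% speedup, 20\% of capacity:}\quad & 1.55 \times (1 - 0.20) = 1.24
\end{aligned}
$$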

Organizations pairing AI coding assistants with expert security code review achieve both worlds: accelerated development with embedded validation that catches tenant isolation flaws, authorization bypasses, and business logic vulnerabilities before production.


You're Already Pentesting. Here's Why Adding Security Code Review Makes It More Effective

If you're already running pentests, adding expert security code review creates a defence-in-depth strategy that catches threats your pentest can't reach. Security code review and penetration testing aren't alternatives; they're complementary:

- Secure Code Review: Uncovers exploitable flaws, logic bypasses, and hidden design weaknesses before deployment through manual inspection of critical modules and expert validation of authentication, authorization, cryptography, and business logic

- Penetration Testing: Validates running systems through real attacker simulation, discovering configuration issues, lateral movement paths, and multi-step exploit chains that expose operational risk

Software Secured delivers both layers with zero false positives. Our secure code reviews blend automated static analysis with expert manual inspection across a 120-point checklist, while our penetration tests provide real exploit chains—not just theoretical findings. Every vulnerability includes reproduction steps, remediation guidance, and CVSS scoring. Reports integrate directly into JIRA, Azure DevOps, and Slack so developers can immediately triage and fix issues. With testing aligned to your release calendar and scheduling within 3-6 weeks, we help high-growth SaaS companies protect data, meet compliance requirements, and close enterprise deals with confidence.

AI-Assisted Development Security Checklist

Use this as a gate for production deployments:

- ✅ Endpoints have explicit and consistent authentication + object-level authorization

- ✅ Input validation is centralized and edge-aware (handles encoding, null bytes, Unicode tricks)

- ✅ Errors are sanitized; logs are redacted (PII, secrets, stack traces removed)

- ✅ Dependencies are pinned and approved (lockfile enforced, SBOM generated)

- ✅ At least two abuse-case tests exist (not happy path only)

- ✅ Service permissions follow least privilege (no wildcard IAM, explicit grants only)

- ✅ A named human owns the security model (not "the team" — a specific engineer)
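The input-validation item is the easiest to get subtly wrong. Here is a minimal sketch of a centralized, edge-aware validator built on the standard `unicodedata` module. `normalize_username`, `InvalidInput`, the 32-character limit, and the allowed character set are all hypothetical choices. The key ordering is to normalize first, then validate, then reject control and invisible characters.

```python
import unicodedata


class InvalidInput(ValueError):
    pass


def normalize_username(raw: str, max_len: int = 32) -> str:
    """Hypothetical shared validator: normalize first, then validate."""
    # NFKC folds compatibility characters (e.g. fullwidth letters) into
    # their canonical forms so lookalikes cannot bypass later checks.
    value = unicodedata.normalize("NFKC", raw).strip()
    if "\x00" in value:
        raise InvalidInput("null byte in input")
    # Reject control and format characters (categories Cc and Cf), which
    # include zero-width and bidirectional-override characters.
    if any(unicodedata.category(ch) in ("Cc", "Cf") for ch in value):
        raise InvalidInput("control or invisible character in input")
    if not value or len(value) > max_len:
        raise InvalidInput("length out of range")
    if not all(ch.isascii() and (ch.isalnum() or ch in "._-") for ch in value):
        raise InvalidInput("unexpected character")
    return value.lower()
```

Normalization means fullwidth "ａｄｍｉｎ" becomes "admin". Uniqueness and reserved-name checks must therefore run on the returned value, never on the raw input.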

Final Thoughts: Speed Is a Multiplier, But So Is Risk

GitHub merged 43.2 million pull requests on average each month in 2025, up 23% year-over-year. The volume is undeniable. The velocity is real.

But if your review process, threat modeling, and validation don't scale with AI-assisted development, you're just moving faster toward unknown failure modes.

Your job as a leader isn't to kill the vibe. It's to shape it.

Establish clear guardrails. Build secure-by-default primitives. Invest in human security review. Make cognitive understanding a performance metric alongside velocity.

AI isn't going away—and it shouldn't. But organizations that ship both fast and secure will be those that treat AI as a power tool requiring skill and judgment, not a replacement for engineering discipline.

References & Further Reading

- Veracode 2025 GenAI Code Security Report — Analysis of 100+ LLMs across security testing

- Georgetown CSET: Cybersecurity Risks of AI-Generated Code (November 2024)

- MIT Media Lab: "Your Brain on ChatGPT" (June 2025)

- Apiiro: 4× Velocity, 10× Vulnerabilities (September 2025)

- METR: Measuring the Impact of Early-2025 AI on Experienced Developer Productivity (July 2025)

- MIT Sloan Management Review: The Hidden Costs of Coding With Generative AI (August 2025)

- IBM Cost of a Data Breach Report 2024

- GitHub Octoverse 2025 — Developer adoption and activity trends

- OWASP Top 10:2025 — Web application security risks

- Stanford AI Index 2025 — Industry AI model development trends
